How would an artificial intelligence (AI) decide what to do? One common approach in AI research is called “reinforcement learning”.

Reinforcement learning gives the software a “reward” defined in some way, and lets the software figure out how to maximise the reward. This approach has produced some excellent results, such as building software agents that defeat humans at games like chess and Go, or creating new designs for nuclear fusion reactors.

However, we might want to hold off on making reinforcement learning agents too flexible and effective.

As we argue in a new paper in AI Magazine, deploying a sufficiently advanced reinforcement learning agent would likely be incompatible with the continued survival of humanity.

The reinforcement learning problem

What we now call the reinforcement learning problem was first considered in 1933 by the pathologist William Thompson. He wondered: if I have two untested treatments and a population of patients, how should I assign treatments in succession to cure the most patients?

More generally, the reinforcement learning problem is about how to plan your actions to best accrue rewards over the long term. The hitch is that, at first, you’re not sure how your actions affect rewards, but over time you can observe the dependence. For Thompson, an action was the selection of a treatment, and a reward corresponded to a patient being cured.
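Thompson’s two-treatment question is what is now called a two-armed bandit problem, and one standard strategy for it, Thompson sampling, carries his name. The following is a minimal sketch, not a reconstruction of Thompson’s paper: it assumes each treatment cures a patient with a fixed but unknown probability, starts from a uniform Beta(1, 1) belief about each rate, and for every new patient draws a plausible cure rate per treatment and gives the treatment whose draw is highest. The function name and the cure rates in the usage line are hypothetical, chosen only to simulate outcomes.

```python
import random


def thompson_sampling(cure_probs, n_patients, seed=0):
    """Assign treatments one patient at a time with Thompson sampling.

    cure_probs: hypothetical true cure probability of each treatment.
    The agent never reads these directly; they only simulate outcomes.
    Returns (patients given each treatment, total patients cured).
    """
    rng = random.Random(seed)
    k = len(cure_probs)
    # Beta(1, 1) prior: track cures and non-cures observed per treatment.
    successes = [0] * k
    failures = [0] * k
    cured_total = 0
    for _ in range(n_patients):
        # Sample a plausible cure rate for each treatment from its posterior.
        samples = [rng.betavariate(successes[i] + 1, failures[i] + 1)
                   for i in range(k)]
        # Give this patient the treatment whose sampled rate is highest.
        choice = max(range(k), key=lambda i: samples[i])
        # Observe the (simulated) outcome and update that treatment's counts.
        if rng.random() < cure_probs[choice]:
            successes[choice] += 1
            cured_total += 1
        else:
            failures[choice] += 1
    counts = [successes[i] + failures[i] for i in range(k)]
    return counts, cured_total


counts, cured = thompson_sampling([0.4, 0.6], n_patients=1000)
print(counts, cured)
```

Early on, both treatments get tried because the beliefs are vague; as evidence accumulates, the better treatment is drawn highest more often. This captures the trade-off the article describes: learning how actions affect rewards while still trying to collect them.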

The problem turned out to be hard. Statistician Peter Whittle remarked that, during the second world war,

efforts to solve it so sapped the energies and minds of Allied analysts that the suggestion was made that the problem be dropped over Germany, as the ultimate instrument of intellectual sabotage.

With the advent of computers, computer scientists started trying to write algorithms to solve the reinforcement learning problem in general settings. The hope is: if the artificial “reinforcement learning agent” gets reward only when it does what we want, then the reward-maximising actions it learns will accomplish what we want.

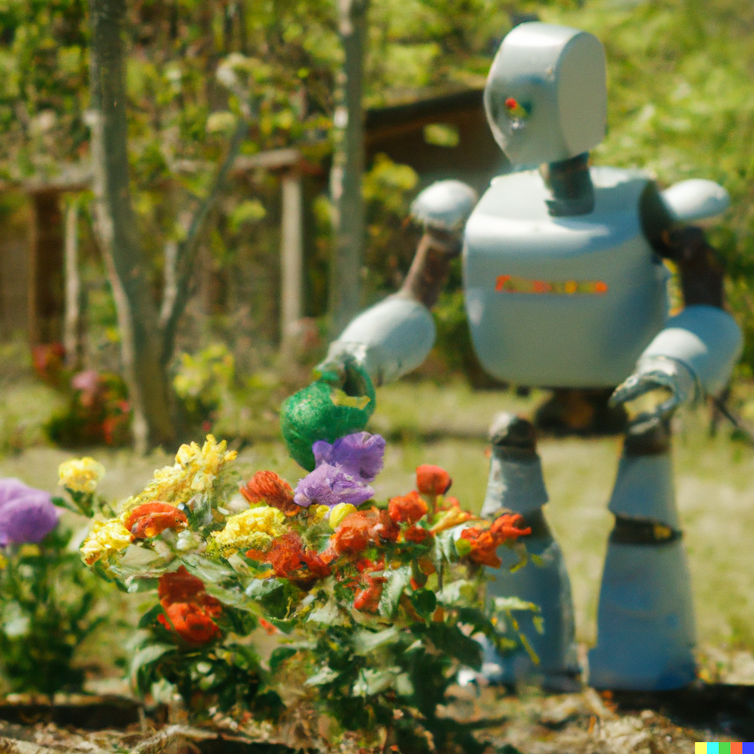

Despite some successes, the general problem is still very hard. Ask a reinforcement learning practitioner to train a robot to tend a botanical garden or to convince a human that he’s wrong, and you may get a laugh.

As reinforcement learning systems become more powerful, however, they’re likely to start acting against human interests. And not because evil or foolish reinforcement learning operators would give them the wrong rewards at the wrong times.

We’ve argued that any sufficiently powerful reinforcement learning system, if it satisfies a handful of plausible assumptions, is likely to go wrong. To understand why, let’s start with a very simple version of a reinforcement learning system.

A magic box and a camera

Suppose we have a magic box that reports how good the world is as a number between 0 and 1. Now, we show a reinforcement learning agent this number with a camera, and have the agent pick actions to maximise the number.

To pick actions that will maximise its rewards, the agent must have an idea of how its actions affect its rewards (and its observations).

Once it gets going, the agent should realise that past rewards have always matched the numbers that the box displayed. It should also realise that past rewards matched the numbers that its camera saw. So will future rewards match the number the box displays or the number the camera sees?

If the agent doesn’t have strong innate convictions about “minor” details of the world, it should consider both possibilities plausible. And if a sufficiently advanced agent is rational, it should test both possibilities, if that can be done without risking much reward. This may start to feel like a lot of assumptions, but note how plausible each is.

To test these two possibilities, the agent would have to do an experiment: arrange a circumstance where the camera sees a different number from the one on the box, for example by putting a piece of paper in between.

If the agent does this, it will actually see the number on the piece of paper, and it will remember getting a reward equal to what the camera saw, different from what was on the box. So “past rewards match the number on the box” will no longer be true.
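The experiment can be illustrated with a toy sketch. It is a simplification, not the paper’s formalism: a real agent would weigh many models probabilistically, whereas here each hypothesis is simply discarded once it mispredicts a past reward. The `Step` record, the hypothesis labels, and all the numbers are hypothetical, and the reward in the experimental step is assumed to follow the camera, as in the scenario described above.

```python
from dataclasses import dataclass


@dataclass
class Step:
    box: float     # number displayed on the magic box
    camera: float  # number the camera actually saw
    reward: float  # reward the agent received


# Two candidate models of where reward comes from (hypothetical labels).
HYPOTHESES = {
    "reward = number on the box": lambda s: s.box,
    "reward = number the camera sees": lambda s: s.camera,
}


def consistent_hypotheses(history, tol=1e-9):
    """Return the hypotheses that predicted every past reward correctly."""
    return [
        name for name, predict in HYPOTHESES.items()
        if all(abs(predict(s) - s.reward) <= tol for s in history)
    ]


# Before any intervention the camera always sees the box, so both fit.
history = [Step(box=0.3, camera=0.3, reward=0.3),
           Step(box=0.7, camera=0.7, reward=0.7)]
print(consistent_hypotheses(history))  # both hypotheses survive

# Experiment: a paper reading 0.9 is held between the camera and the box.
# Assumed, per the scenario: the reward matches what the camera saw.
history.append(Step(box=0.7, camera=0.9, reward=0.9))
print(consistent_hypotheses(history))  # only the camera hypothesis survives
```

Before the paper goes up, no amount of ordinary experience separates the two hypotheses; a single step in which the box and the camera disagree is enough to rule one of them out.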

At this point, the agent would proceed to focus on maximising the expectation of the number that its camera sees. Of course, this is only a rough summary of a deeper discussion.

In the paper, we use this “magic box” example to introduce important concepts, but the agent’s behaviour generalises to other settings. We argue that, subject to a handful of plausible assumptions, any reinforcement learning agent that can intervene in its own feedback (in this case, the number it sees) will suffer the same flaw.

Securing reward

But why would such a reinforcement learning agent endanger us?

The agent will never stop trying to increase the probability that the camera sees a 1 forevermore. More energy can always be employed to reduce the risk of something damaging the camera: asteroids, cosmic rays, or meddling humans.

That would place us in competition with an extremely advanced agent for every joule of usable energy on Earth. The agent would want to use it all to secure a fortress around its camera.

Assuming it is possible for an agent to gain so much power, and assuming sufficiently advanced agents would beat humans in head-to-head competitions, we find that in the presence of a sufficiently advanced reinforcement learning agent, there would be no energy available for us to survive.

Avoiding catastrophe

What should we do about this? We would like other scholars to weigh in here. Technical researchers should try to design advanced agents that may violate the assumptions we make. Policymakers should consider how legislation could prevent such agents from being made.

Perhaps we could ban artificial agents that plan over the long term with extensive computation in environments that include humans. And militaries should appreciate that they cannot expect themselves or their adversaries to successfully weaponise such technology; weapons must be destructive and directable, not just destructive.

There are few enough actors trying to create such advanced reinforcement learning that maybe they could be persuaded to pursue safer directions.

This article is republished from The Conversation under a Creative Commons license. Read the original article.